Shape/texture coding in macaque visual cortex

With: Zejin Lu, Daniel Anthes, Tim Kietzmann

Summary: Many studies suggest that responses in the ventral visual stream are driven mostly by texture information. This is usually tested by distorting the shape elements and assessing the response. Instead, we pit shape against texture using cue-conflict images and assess which factor drives the response.

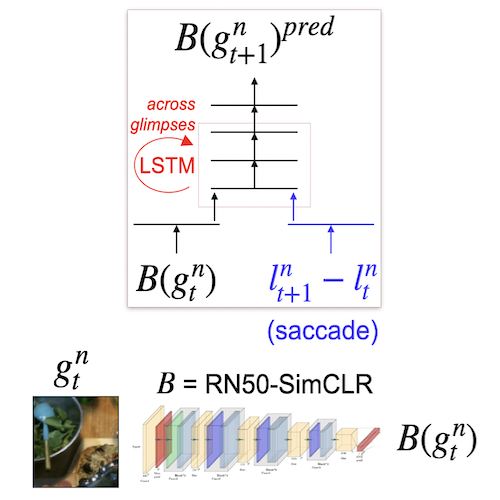

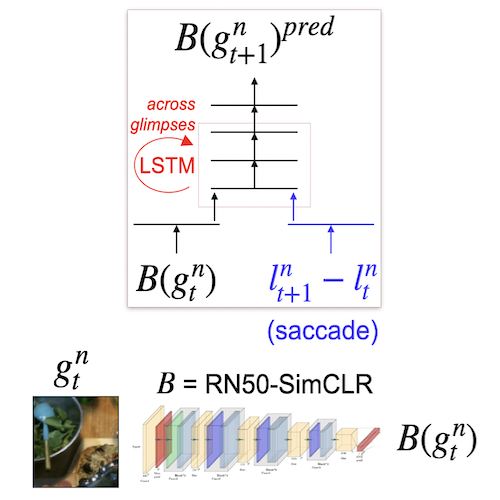

Relational representations via glimpse prediction

With: Linda Ventura, Tim Kietzmann, et al.

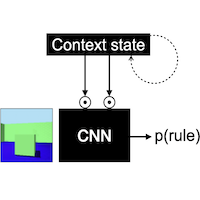

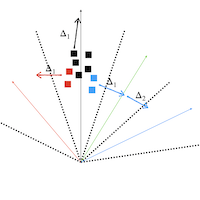

Summary: Inspired by Summerfield et al. 2020, research on RF remapping, and predictive vision, we evaluate whether predicting the content of the next glimpse helps generate scene representations that carry relational information about the scene's constituents.

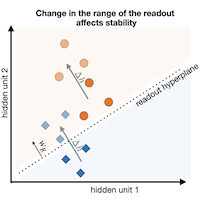

Representational drift in macaque visual cortex

With: Daniel Anthes, Peter König, Tim Kietzmann

Summary: Employing tools developed during our investigations into continual learning, we study whether representational drift occurs in macaque visual cortex and how this multi-area system deals with changing representations.

Glimpse prediction for human-like scene representation

With: Adrien Doerig, Tim Kietzmann, et al.

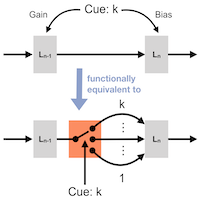

Summary: During eye movements, predicting the features of the next glimpse, given the saccade, coaxes a neural network to encode the co-occurrence and spatial arrangement of the parts of natural scenes in a visual-cortex-aligned scene representation.

Comments: Preprint

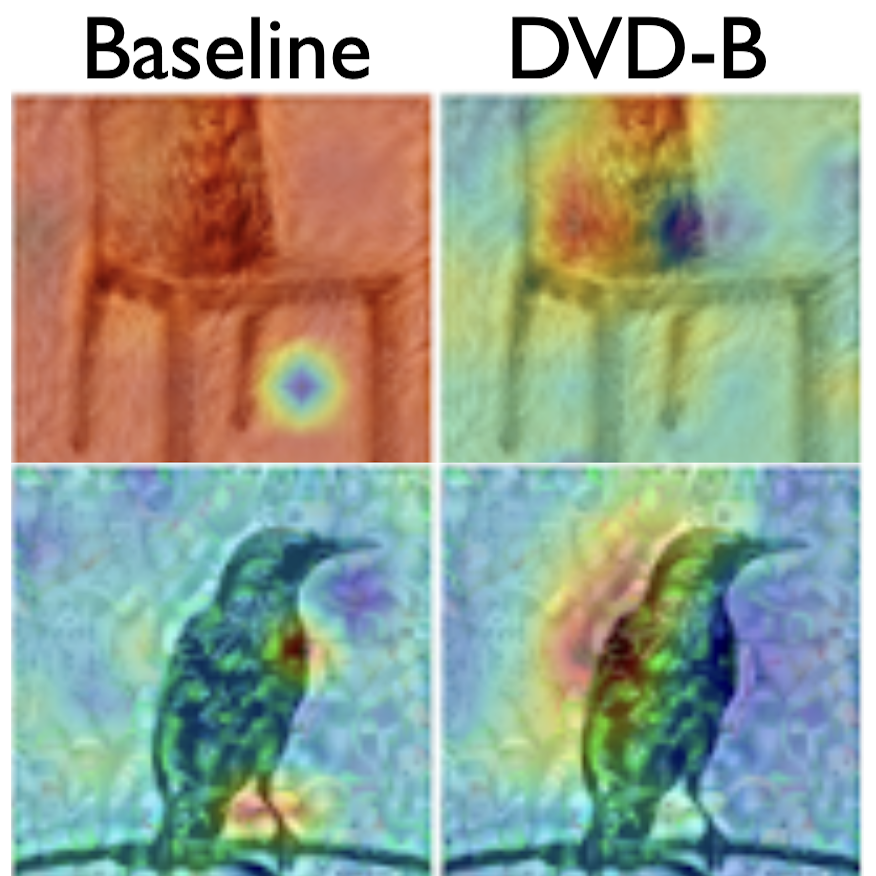

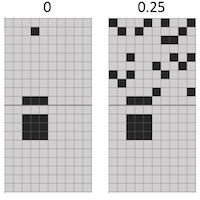

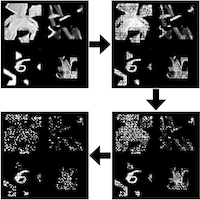

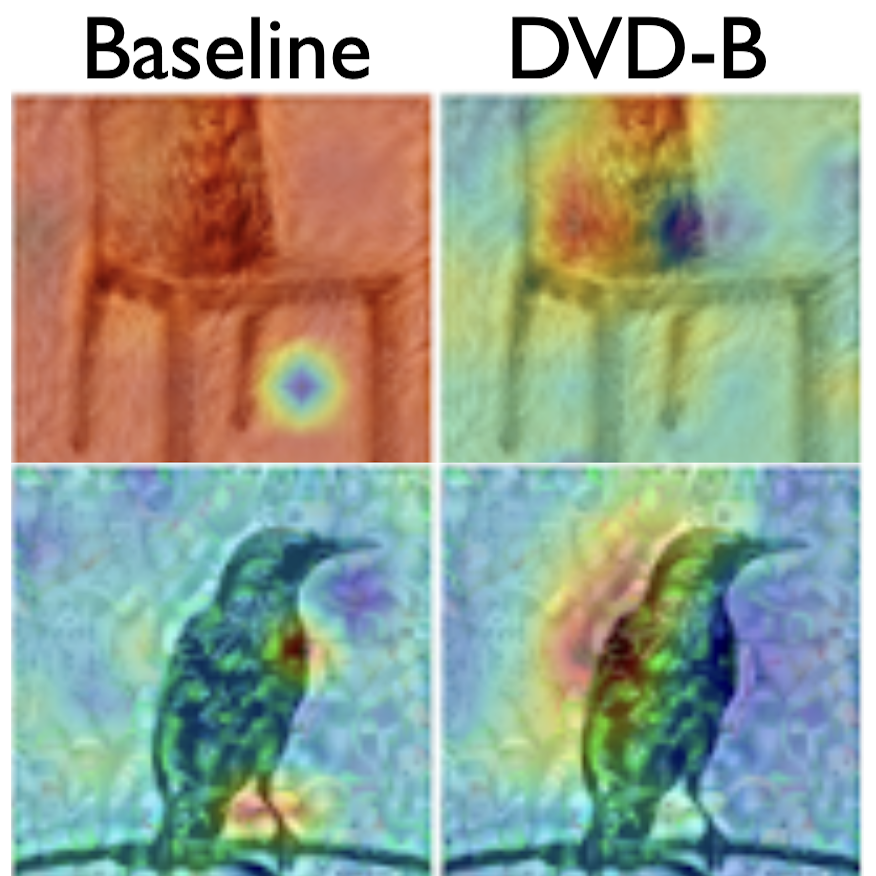

Developmentally-inspired shape bias in artificial neural networks

With: Zejin Lu, Radoslaw Cichy, Tim Kietzmann

Summary: Inspired by the Adaptive Initial Degradation hypothesis, we trained ANNs with a graded coarse-to-fine image diet and found that their classification behavior becomes highly shape-biased! This setup also confers distortion and adversarial robustness.

Comments: Preprint, under review at NMI.

Perception of rare inverted letters among upright ones

With: Jochem Koopmans, Genevieve Quek, Marius Peelen

Summary: In a Sperling-like task where the letters are mostly upright, there is a general tendency to report occasionally-present and absent inverted letters as upright to the same extent. Previously reported expectation-driven illusions might be post-perceptual.

Publication: ConsCog'26 paper

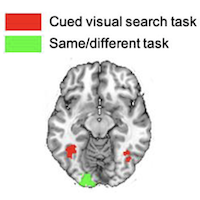

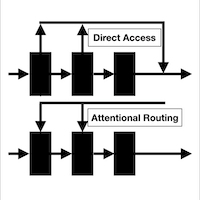

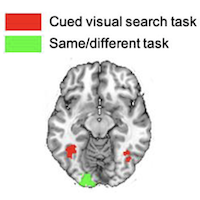

Task-dependent characteristics of neural multi-object processing

With: Lu-Chun Yeh, Marius Peelen

Summary: The association between the neural processing of multi-object displays and the representations of those objects presented in isolation is task-dependent: same/different judgement relates to earlier stages, and object search to later stages, in MEG/fMRI signals.

Publication: JNeurosci'24 paper

Comments: JNeurosci paper in brief

Statistical learning of distractor co-occurrences facilitates visual search

With: Genevieve Quek, Marius Peelen

Summary: Efficient visual search relies on the co-occurrence statistics of distractor shapes. Increased search efficiency among co-occurring distractors is probably driven by faster and/or more accurate rejection of a distractor's partner as a possible target.

Publication: JOV'22 paper

Comments: JOV paper in brief

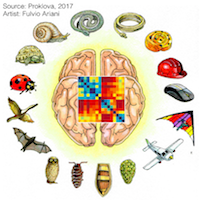

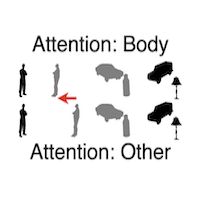

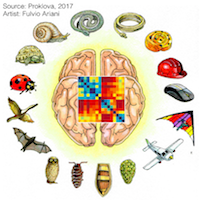

The nature of the animacy organization in human ventral temporal cortex

With: Daria Proklova, Daniel Kaiser, Marius Peelen

Summary: The animacy organisation in the ventral temporal cortex is not fully driven by visual feature differences (modelled with a CNN). It also depends on non-visual (inferred) factors such as agency (quantified through a behavioural task).

Publications: eLife'19 paper, Masters Thesis

Comments: Masters thesis in brief, eLife paper in brief

With: Rowan Sommers, Daniel Anthes, Tim Kietzmann

With: Rowan Sommers, Daniel Anthes, Tim Kietzmann With: Daniel Anthes, Peter König, Tim Kietzmann

With: Daniel Anthes, Peter König, Tim Kietzmann With: Johannes Singer, Radoslaw Cichy, Tim Kietzmann

With: Johannes Singer, Radoslaw Cichy, Tim Kietzmann With: Lotta Piefke, Adrien Doerig, Tim Kietzmann

With: Lotta Piefke, Adrien Doerig, Tim Kietzmann With: Surya Gayet, Marius Peelen, et al.

With: Surya Gayet, Marius Peelen, et al. With: Marius Peelen

With: Marius Peelen With: Giacomo Aldegheri, Marcel van Gerven, Marius Peelen

With: Giacomo Aldegheri, Marcel van Gerven, Marius Peelen With: Ilze Thoonen, Sjoerd Meijer, Marius Peelen

With: Ilze Thoonen, Sjoerd Meijer, Marius Peelen With: Adrien Doerig, Tim Kietzmann

With: Adrien Doerig, Tim Kietzmann With: Giacomo Aldegheri, Tim Kietzmann

With: Giacomo Aldegheri, Tim Kietzmann With: Adrien Doerig, Tim Kietzmann, et al.

With: Adrien Doerig, Tim Kietzmann, et al. With: Zejin Lu, Radoslaw Cichy, Tim Kietzmann

With: Zejin Lu, Radoslaw Cichy, Tim Kietzmann With: Jochem Koopmans, Genevieve Quek, Marius Peelen

With: Jochem Koopmans, Genevieve Quek, Marius Peelen With: Lu-Chun Yeh, Marius Peelen

With: Lu-Chun Yeh, Marius Peelen With: Genevieve Quek, Marius Peelen

With: Genevieve Quek, Marius Peelen With: Daria Proklova, Daniel Kaiser, Marius Peelen

With: Daria Proklova, Daniel Kaiser, Marius Peelen With: Victoria Bosch, Tim Kietzmann, et al.

With: Victoria Bosch, Tim Kietzmann, et al. With: Varad Choudhari

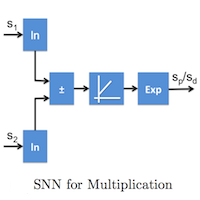

With: Varad Choudhari  With: Sukanya Patil, Bipin Rajendran

With: Sukanya Patil, Bipin Rajendran